
After reading this article, you should be able to:

- Appreciate the importance of critical appraisal skills;

- Understand and apply principles of critical appraisal to support evidence-based practice;

- Recognise the different types of studies found in research and their design;

- Determine the quality, value and applicability of a research paper to clinical practice.

Not all data in healthcare research are of equal quality[1]. To incorporate evidence-based medicine (EBM) into practice, pharmacists must be able to assess the quality and reliability of evidence[2]. This requires the development of critical appraisal skills.

Critical appraisal

Using health-related literature to support practice is a multi-step process for healthcare professionals that requires[2]:

- Formulation of a question that is important for improving patient health while advancing scientific and medical knowledge;

- Searching the relevant literature to find the best available evidence;

- Appraising research critically to evaluate quality and reliability, as well as applicability to the formulated question;

- Applying the evidence to practice;

- Monitoring the interventions to ensure the outcomes are reproducible and effective.

Assessing and evaluating publications can be daunting. This article aims to help pharmacists critically review a research paper to support clinical decision making and evidence-based practice.

This article focuses on the theory behind critical appraisal.

Types of studies in health research

- Cohort studies

- Case-control studies

- Cross-sectional studies

- Randomised clinical trials

- Systematic reviews

- Others

To undertake critical appraisal, it is important first to understand the types of studies used to generate evidence, and how their data are analysed to provide standardised measurements of outcomes[3]. These measurements can then be compared to evaluate whether an intervention is effective. A summary of the main study designs used in healthcare research, including their advantages and limitations, can be found in Table 1.

The most common types of studies used to report healthcare research include:

Cohort studies

These are observational studies that can be either retrospective (e.g. examining historical records) or prospective. A group of people who do not have the outcome of interest is selected and observed over a period of time to see whether they develop it (e.g. a study exploring the association between major depression and the risk of advanced complications in type 2 diabetes)[4,5]. By comparing the incidence of the outcome in people exposed to the factor of interest with that in people who were not exposed, the relative risk can be determined[6]. One of the biggest problems with cohort studies is the loss of participants during follow-up (e.g. owing to personal reasons, or their condition not improving after treatment), which can significantly affect the results and outcomes[7]. Nevertheless, cohort studies are regarded as one of the strongest ways to test a hypothesis when an experimental intervention is not possible[8].
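As a worked illustration of the relative risk described above: the risk in each group is the number of people who develop the outcome divided by the number followed up, and the relative risk is the ratio of the two. The Python sketch below is a minimal example under that definition; the function name `relative_risk` and the counts are hypothetical and are not taken from any study cited here.

```python
def relative_risk(exposed_cases: int, exposed_total: int,
                  unexposed_cases: int, unexposed_total: int) -> float:
    """Return the relative risk (risk ratio) from cohort study counts.

    Risk in each group = participants who developed the outcome
                         / participants followed up in that group.
    """
    if exposed_total <= 0 or unexposed_total <= 0:
        raise ValueError("each group must contain at least one participant")
    risk_exposed = exposed_cases / exposed_total
    risk_unexposed = unexposed_cases / unexposed_total
    if risk_unexposed == 0:
        raise ValueError("no outcomes in the unexposed group; ratio is undefined")
    return risk_exposed / risk_unexposed


# Hypothetical cohort: 30 of 200 exposed and 15 of 200 unexposed participants
# develop the outcome during follow-up.
print(f"Relative risk: {relative_risk(30, 200, 15, 200):.2f}")  # Relative risk: 2.00
```

A relative risk of 1 suggests no association, while values above 1 suggest the exposure is associated with a higher risk. Participants lost to follow-up drop out of the denominators, which is one way attrition can distort the estimate.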

Case-control studies

These are observational studies, typically retrospective, in which patients with a particular outcome of interest (cases) are compared with people who do not have the outcome but are otherwise similar (controls), to find out whether the two groups differed in their past exposure to a suspected risk factor[6,9]. Case-control studies determine the relative importance of a predictor variable in relation to the presence or absence of the disease[6,9]. An example of a case-control study is one investigating the association between low serum vitamin D levels and migraine[10].
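Because the investigators decide how many cases and controls to recruit, a case-control study cannot estimate risk directly; the strength of association is usually summarised as an odds ratio instead. A minimal sketch, assuming a simple 2×2 table; the function name `odds_ratio` and the counts are hypothetical and do not come from the vitamin D study mentioned above.

```python
def odds_ratio(exposed_cases: int, unexposed_cases: int,
               exposed_controls: int, unexposed_controls: int) -> float:
    """Return the odds ratio from a case-control 2x2 table.

    OR = (odds of exposure among cases) / (odds of exposure among controls)
       = (exposed_cases / unexposed_cases) / (exposed_controls / unexposed_controls)
    """
    if unexposed_cases == 0 or exposed_controls == 0:
        raise ValueError("a zero cell in the denominator makes the odds ratio undefined")
    return (exposed_cases * unexposed_controls) / (unexposed_cases * exposed_controls)


# Hypothetical data: 40 of 100 cases and 25 of 100 controls were exposed
# (e.g. had low serum vitamin D).
print(f"Odds ratio: {odds_ratio(40, 60, 25, 75):.2f}")  # Odds ratio: 2.00
```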

Cross-sectional studies

These studies collect data from a population at a single point in time, commonly using interviews, questionnaires and surveys[10]. Although they are not rigorous enough to measure the effects of clinical and medical interventions, they can be used to determine the attitudes or characteristics of a cross-section of a defined population[11]. For example, one cross-sectional study aimed to identify the main competencies and training needs of primary care pharmacists to inform a National Health Service Executive training programme[11].

Randomised clinical trials (RCT)

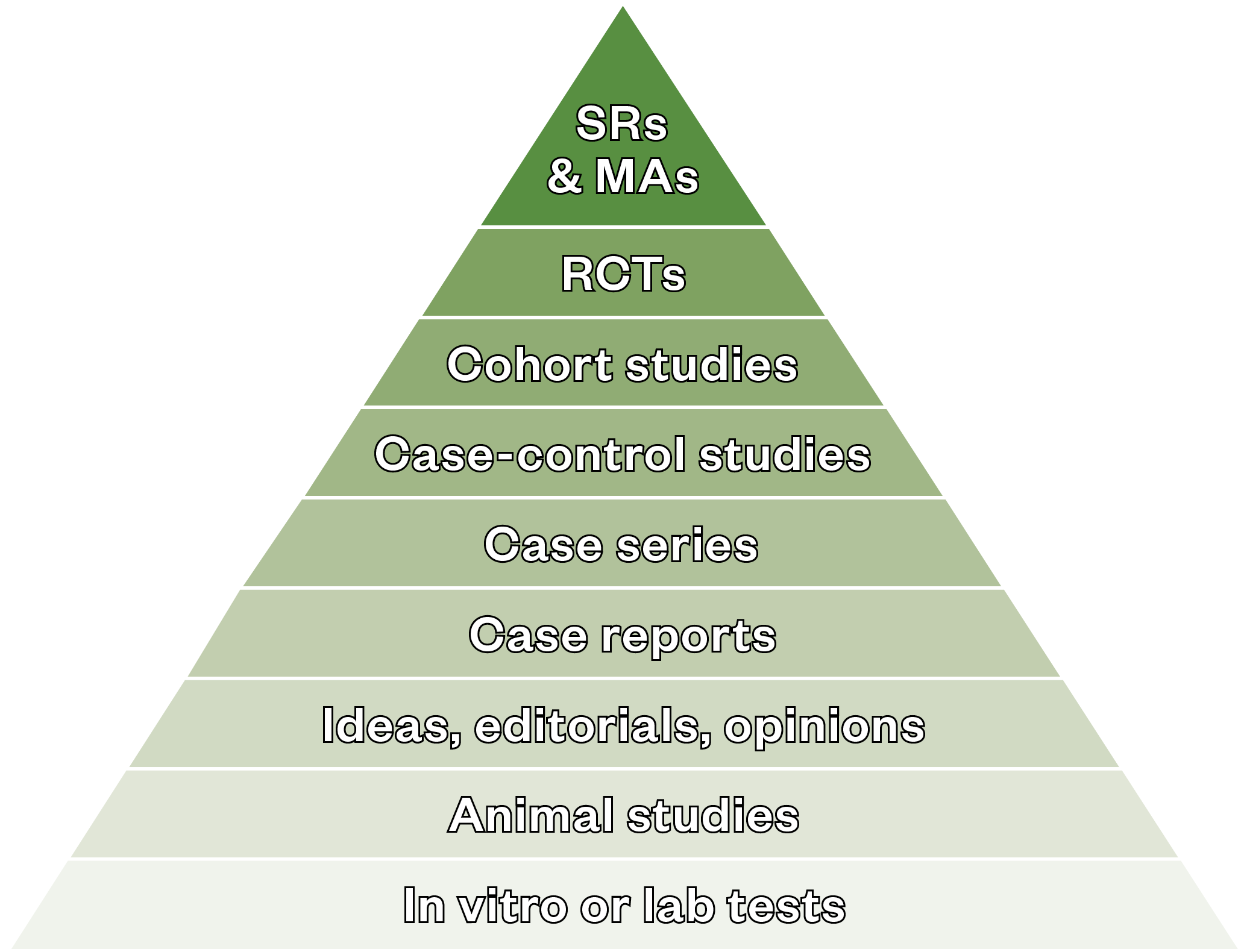

RCTs are the most rigorous and robust research method for determining whether a cause–effect relationship exists between a new treatment or intervention and its outcome[12]. Although no single study is likely to prove causality, randomisation reduces bias, and RCTs are often blinded so that clinicians, patients and researchers do not know whether patients are in the control or intervention group[12,13]. RCTs are considered the gold standard in clinical research and sit near the top of the evidence pyramid, below systematic reviews and meta-analyses[14] (see Figure).

Figure: The evidence pyramid. MA: meta-analyses; SR: systematic reviews.

The greatest advantage of RCTs is that they minimise bias, providing strong clinical evidence that is favoured by healthcare professionals; however, this type of study has some limitations[15] (see Table 1).
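Randomisation means that chance, rather than the clinician or patient, decides which group each participant joins. The article does not specify a method, so the sketch below uses permuted-block randomisation (one common approach) purely as an illustration, assuming two arms and a block size of 4; the function name `block_randomise` and the seed are hypothetical.

```python
import random
from typing import List, Optional


def block_randomise(n_participants: int, block_size: int = 4,
                    seed: Optional[int] = None) -> List[str]:
    """Allocate participants to two arms using permuted blocks.

    Every block holds equal numbers of 'intervention' and 'control' slots in a
    random order, so the arms stay balanced throughout recruitment while each
    allocation within a block is left to chance.
    """
    if block_size <= 0 or block_size % 2 != 0:
        raise ValueError("block size must be a positive even number")
    rng = random.Random(seed)  # seeded only so the sequence can be reproduced and audited
    allocations: List[str] = []
    while len(allocations) < n_participants:
        block = ["intervention", "control"] * (block_size // 2)
        rng.shuffle(block)
        allocations.extend(block)
    return allocations[:n_participants]


schedule = block_randomise(12, block_size=4, seed=2024)
print(schedule.count("intervention"), schedule.count("control"))  # 6 6
```

In a real trial the allocation sequence would be generated independently and concealed from recruiting clinicians; blinding is a separate safeguard that randomisation alone does not provide.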

Systematic reviews

Systematic reviews are robust, thorough and comprehensive, and provide a more accurate, evidence-based assessment of a research question[16,17]. They bring together a large body of data from a wide range of primary studies, and the results are analysed collectively (e.g. by meta-analysis) to assess their consistency and reproducibility[16,17]. Studies are included according to explicit selection criteria, and reviews are typically, although not always, analysed quantitatively for statistical significance[17]. Systematic reviews are useful for obtaining current information on contemporary topics in healthcare. For example, one review of the safety and efficacy of COVID-19 vaccines analysed and compared data from several RCTs to provide stronger evidence on vaccine use.
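One common way a meta-analysis combines study results is inverse-variance weighting, in which more precise studies (smaller standard errors) carry more weight, and Cochran's Q gives a simple check of consistency between studies. The sketch below is a minimal fixed-effect version, assuming each study reports a log relative risk and its standard error; the function name and the three studies are invented for illustration, and real reviews often use random-effects models and dedicated software.

```python
import math
from typing import List, Tuple


def fixed_effect_meta(log_effects: List[float],
                      standard_errors: List[float]) -> Tuple[float, Tuple[float, float], float]:
    """Fixed-effect inverse-variance meta-analysis of ratio measures.

    log_effects: each study's log relative risk (or log odds ratio).
    standard_errors: the standard error of each log estimate.
    Returns (pooled ratio, 95% CI on the ratio scale, Cochran's Q).
    """
    if len(log_effects) != len(standard_errors) or not log_effects:
        raise ValueError("need one standard error per study and at least one study")
    weights = [1.0 / se ** 2 for se in standard_errors]  # precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    # Cochran's Q: weighted spread of study results around the pooled value.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_effects))
    ci = (math.exp(pooled - 1.96 * pooled_se), math.exp(pooled + 1.96 * pooled_se))
    return math.exp(pooled), ci, q


# Three hypothetical trials reporting relative risks of 0.80, 0.70 and 0.90.
rr, (low, high), q = fixed_effect_meta(
    [math.log(0.80), math.log(0.70), math.log(0.90)], [0.10, 0.20, 0.15])
print(f"Pooled RR {rr:.2f} (95% CI {low:.2f} to {high:.2f}); Cochran's Q = {q:.2f}")
```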

Others

Other studies used to gather evidence in healthcare research include:

- i) case studies and case series: focus on an individual or a collection of cases that are of interest to the author, but do not involve testing a hypothesis;

- ii) qualitative studies: well suited to investigating the meanings, interpretations, social and cultural norms and perceptions that affect health-related practice and behaviour;

- iii) diagnostic tests: investigate the accuracy of a diagnostic test, commonly by comparing it with a ‘gold standard’ and measuring its sensitivity and specificity[17–20].
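Sensitivity and specificity are simple proportions from a 2×2 table that cross-classifies the new test result against the reference standard. A minimal sketch, with a hypothetical function name and hypothetical counts; both measures are normally reported together, because a test can score highly on one while performing poorly on the other.

```python
from typing import Tuple


def sensitivity_specificity(true_pos: int, false_neg: int,
                            false_pos: int, true_neg: int) -> Tuple[float, float]:
    """Compare a new test with a reference ('gold standard') test.

    Sensitivity = proportion of people WITH the condition whom the test detects.
    Specificity = proportion of people WITHOUT the condition whom the test clears.
    """
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Hypothetical study: 100 people have the condition on the gold standard (90 detected)
# and 200 do not (180 correctly test negative).
sens, spec = sensitivity_specificity(true_pos=90, false_neg=10, false_pos=20, true_neg=180)
print(f"Sensitivity {sens:.0%}, specificity {spec:.0%}")  # Sensitivity 90%, specificity 90%
```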

| Type of design | Advantages | Limitations |
| --- | --- | --- |
| Cohort studies | Likened to natural experiments, in which large populations are followed over extended periods of time. An alternative to RCTs when these are unsuitable or unethical | High potential for selection bias and confounding factors. Loss of participants can affect the outcome of the study |
| Case-control studies | Typically used for investigating risk factors when the outcome of interest is rare and there is a long period between exposure and outcome | Risk of observational and recall bias (as the study is usually retrospective), as well as confounding factors |
| Case reports and case series | Can offer invaluable information on the benefits and harms of certain treatments, particularly in the absence of experimental designs such as RCTs | Uncontrolled study designs with an increased risk of bias and no framework for synthesising and applying evidence |
| Cross-sectional studies | Generally quick, easy and cheap to perform, often involving a questionnaire survey | Susceptible to selection bias (minimised by recruiting a sample representative of the target population) and to non-response to questionnaires |
| Randomised controlled trials (RCTs) | Random allocation and blinding of clinicians, researchers and patients minimise bias considerably | Bias can be introduced if participants realise they are receiving the active treatment and subconsciously alter their behaviour. Systematic errors in the design can lead to unreliable results |
| Systematic reviews (SRs) | When a meta-analysis is performed, data from the selected studies are combined to assess reproducibility and consistency | Risk of bias arises from the selection of studies and the quality of the primary research included. SRs not analysed statistically are prone to bias introduced by the reviewer |

Table 1: Advantages and limitations of the main study designs used in healthcare research

Steps to follow when reviewing an article

Once an article has been identified as relevant to the topic of interest, it is essential to first determine the quality of the study by assessing its appropriateness, including whether the study design was able to answer the hypothesis/research question.

| Step | Questions to consider |
| --- | --- |
| 1. Determine whether the study addressed a clearly focused issue | What was the main aim/hypothesis of the study? What was the exposure or intervention? Was the research design appropriate? Was the study retrospective or prospective? |
| 2. Identify the study population | What were the inclusion/exclusion criteria? Was the sample an accurate representation of a defined population? Were the control and test groups selected from populations comparable in all respects except for the factor under investigation? Were any study participants lost? |
| 3. Interpret the results | What was the outcome and how was it measured? Is there clear evidence of an association between exposure and outcome? |
| 4. Assess for bias | Were the main potential confounders identified and considered? How well was the study conducted to minimise the risk of bias and confounding? |
| 5. Determine whether the study can be applied to practice | Are the results of the study directly applicable to patient groups in local practice? |

Table 2: Steps to follow when reviewing an article

The following steps outline the main considerations when validating a study and are summarised in Table 2.

1. Determine whether the study addressed a clearly focused issue

The introduction of the article should clearly state the aims and objectives of the research being undertaken, and background information should be provided so the reader understands the reasons why this research is needed, and how the research findings will contribute to advancing clinical and scientific knowledge.

Most research studies will evaluate one of the following:

- Therapy — efficacy of a drug treatment, surgical procedure or other intervention;

- Causation — if a suspected risk factor is related to a particular disease;

- Prognosis — outcome of a disease following treatment/diagnosis;

- Diagnosis — the validity and reliability of a new diagnostic test;

- Screening — test applied to a population to detect disease.

2. Identify the study population

Particular attention must be paid to the selection criteria used in RCTs. Excluding groups of patients can limit the generalisability of the results and lead to overestimation of the study outcomes[29]. Women, children, older people and people with other medical conditions are often excluded from these studies, so caution must be applied when interpreting the results[30].

Crucially, the groups being compared should share common characteristics other than the variable being studied, so that valid comparisons can be made[23]. For observational studies, such as cohort and cross-sectional studies, the individuals selected should be an accurate representation of a defined population[31].

3. Interpret the results

Assessing the appropriateness of statistical analysis can be tricky, but evidence-based practice requires a basic understanding of statistics, since errors are known to occur in published manuscripts[28]. The ‘methods’ section of the paper should clearly explain the rationale for the approach and how the outcomes and results were obtained, in language that is understandable to the journal’s readership.

Statistics have two main uses in research: to describe the data (descriptive statistics) and to allow comparisons to be made and conclusions drawn (inferential statistics)[32,33]. A previous article from The Pharmaceutical Journal offers a basic introduction to statistics, providing a practical overview of descriptive and inferential statistics and significance testing; these topics are not discussed in detail in this article.

4. Assess for bias

Bias can occur at any stage of a research study, and the ability to identify it is an important critical appraisal skill because bias can lead to inaccurate results. Bias is systematic (non-random) error in the design, conduct or analysis of a study that results in mistaken estimates. It can arise from the way populations are sampled, or the way in which data are collected or analysed, and different study designs require different steps to reduce it. Unlike random error, systematic bias is not reduced by increasing the sample size[31].
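The difference between random error and systematic bias can be seen in a short simulation. The sketch below assumes a hypothetical true mean systolic blood pressure of 130 mmHg, random variation with a standard deviation of 15 mmHg, and a hypothetical measuring device that always reads 5 mmHg too high; all values are invented for illustration.

```python
import random
import statistics

TRUE_MEAN = 130.0       # hypothetical true mean systolic blood pressure (mmHg)
SYSTEMATIC_ERROR = 5.0  # hypothetical device that always over-reads by 5 mmHg
RANDOM_SD = 15.0        # hypothetical random variation between readings (mmHg)


def estimated_mean(sample_size: int, rng: random.Random) -> float:
    """Mean of `sample_size` readings, each with random AND systematic error."""
    readings = [rng.gauss(TRUE_MEAN, RANDOM_SD) + SYSTEMATIC_ERROR
                for _ in range(sample_size)]
    return statistics.fmean(readings)


rng = random.Random(1)
for n in (10, 100, 1_000, 100_000):
    estimate = estimated_mean(n, rng)
    print(f"n = {n:>7,}: estimate {estimate:6.1f} mmHg, "
          f"error vs truth {estimate - TRUE_MEAN:+5.1f} mmHg")
```

As the sample grows, the random scatter in the estimate shrinks, but the error settles at about +5 mmHg rather than zero: only a better measurement method, not a larger sample, would remove it.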

There are many types of bias, but they can be considered under three main categories:

- Selection bias occurs when the composition of the study participants systematically differs from that of the source population. A simple example is an influenza vaccine trial that recruits only healthy adults: the sample is not representative of the general population because it misses out children, older people and adults with comorbidities;

- Information bias, or ‘misclassification’, occurs when outcomes, exposures of interest (factors measured) or other data are incorrectly classified or measured. This is particularly problematic in observational studies (cross-sectional, case-control or cohort studies) where data are gathered using questionnaires, surveys and interviews, as these methods of data collection can be unreliable;

- Confounding is often referred to as a ‘mixing of effects’, where the effect of the exposure under study on a given outcome is mixed with the effect of an additional factor (or set of factors), distorting the true relationship. Confounding factors may mask a real association or, more commonly, create an apparent association between the treatment and outcome when no real association exists[34]. For example, alcohol intake has been associated with risk of coronary heart disease[35]. However, many confounding factors ‘blur’ this relationship, such as differences in socio-economic and lifestyle characteristics, the type of drink consumed (e.g. beer or wine), and the fact that smokers are more likely than non-smokers to drink alcohol. These factors confound the observed relationship between the amount of alcohol consumed and the risk of coronary heart disease[36].
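A standard way to check for confounding is to compare the crude (unadjusted) association with the association within strata of the suspected confounder. The sketch below uses invented counts, constructed so that smoking entirely explains an apparent link between alcohol and coronary heart disease; it does not reflect the findings of the studies cited above. The strata are pooled with the Mantel-Haenszel risk ratio, one standard adjustment method; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stratum:
    """2x2 counts within one level of the suspected confounder."""
    exposed_cases: int
    exposed_total: int
    unexposed_cases: int
    unexposed_total: int

    def risk_ratio(self) -> float:
        return (self.exposed_cases / self.exposed_total) / (
            self.unexposed_cases / self.unexposed_total)


def crude_risk_ratio(strata: List[Stratum]) -> float:
    """Risk ratio after collapsing all strata together (ignores the confounder)."""
    exposed_cases = sum(s.exposed_cases for s in strata)
    exposed_total = sum(s.exposed_total for s in strata)
    unexposed_cases = sum(s.unexposed_cases for s in strata)
    unexposed_total = sum(s.unexposed_total for s in strata)
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)


def mantel_haenszel_risk_ratio(strata: List[Stratum]) -> float:
    """Risk ratio adjusted for the stratifying factor (Mantel-Haenszel method)."""
    numerator = denominator = 0.0
    for s in strata:
        n = s.exposed_total + s.unexposed_total
        numerator += s.exposed_cases * s.unexposed_total / n
        denominator += s.unexposed_cases * s.exposed_total / n
    return numerator / denominator


# Invented counts: drinkers (exposed) vs non-drinkers, stratified by smoking status.
smokers = Stratum(exposed_cases=40, exposed_total=400, unexposed_cases=10, unexposed_total=100)
non_smokers = Stratum(exposed_cases=2, exposed_total=100, unexposed_cases=8, unexposed_total=400)
strata = [smokers, non_smokers]

print(f"Crude RR: {crude_risk_ratio(strata):.2f}")                       # 2.33
print(f"RR in smokers: {smokers.risk_ratio():.2f}")                      # 1.00
print(f"RR in non-smokers: {non_smokers.risk_ratio():.2f}")              # 1.00
print(f"Smoking-adjusted RR: {mantel_haenszel_risk_ratio(strata):.2f}")  # 1.00
```

In this invented data set, drinkers are more likely to smoke and smokers have a higher baseline risk, so the crude association vanishes within each smoking stratum. Stratification can only adjust for confounders that were actually measured.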

5. Determine whether the study can be applied to practice

Pharmacy professionals can determine the applicability of study results to clinical practice by:

- Comparing research results to relevant guidelines (e.g. National Institute for Health and Care Excellence);

- Identifying whether local or national clinical policies exist that are supported by EBM;

- Discussing recommendations and the applicability of research findings with colleagues and peers;

- Summarising and critically appraising the various interventions studied in relevant clinical trials and studies;

- Evaluating the cost-effectiveness of the interventions[37].

Critical appraisal skills are necessary to extract the most relevant and useful information from published literature. All healthcare professionals have a duty to keep up to date with current research, to identify gaps in knowledge and to ensure optimal patient outcomes. These skills are particularly valuable for pharmacists, as demand for them grows with increasing opportunities to deliver advanced clinical services.

Additional resources — critical appraisal tools

Several user-friendly tools are available to assist individuals with developing critical appraisal skills. Table 3 summarises a selection of useful websites that provide checklists and guidance on critical appraisal skills.

| Study design | Tools available | Website |
| --- | --- | --- |
| Cohort studies | CASP cohort tool, SIGN, STROBE | https://casp-uk.net/casp-tools-checklists/ https://www.sign.ac.uk/what-we-do/methodology/checklists/ https://www.equator-network.org/reporting-guidelines/strobe/ |
| Case-control studies | CASP, SIGN, STROBE | https://casp-uk.net/casp-tools-checklists/ https://www.sign.ac.uk/what-we-do/methodology/checklists/ https://www.equator-network.org/reporting-guidelines/strobe/ |
| Cross-sectional studies | STROBE | https://www.equator-network.org/reporting-guidelines/strobe/ |
| Randomised controlled trials | CEBM, SIGN | https://www.cebm.ox.ac.uk/resources/ebm-tools/critical-appraisal-tools https://www.sign.ac.uk/what-we-do/methodology/checklists/ |
| Systematic reviews | CEBM, CASP, SIGN | https://www.cebm.ox.ac.uk/resources/ebm-tools/critical-appraisal-tools https://casp-uk.net/casp-tools-checklists/ https://www.sign.ac.uk/what-we-do/methodology/checklists/ |
| General | CCAT | https://conchra.com.au/2015/12/08/crowe-critical-appraisal-tool-v1-4/ |

Table 3: Critical appraisal tools

CASP: Critical Appraisal Skills Programme; CCAT: Crowe Critical Appraisal Tool; CEBM: Centre for Evidence-Based Medicine; SIGN: Scottish Intercollegiate Guidelines Network; STROBE: Strengthening the Reporting of Observational Studies in Epidemiology.

References

1. Simera I, Moher D, Hoey J, et al. A catalogue of reporting guidelines for health research. European Journal of Clinical Investigation 2010;40:35–53. doi:10.1111/j.1365-2362.2009.02234.x

2. Umesh G, Karippacheril J, Magazine R. Critical appraisal of published literature. Indian J Anaesth 2016;60:670–3. doi:10.4103/0019-5049.190624

3. Peinemann F, Tushabe D, Kleijnen J. Using multiple types of studies in systematic reviews of health care interventions – a systematic review. PLoS One 2013;8:e85035. doi:10.1371/journal.pone.0085035

4. Song J, Chung K. Observational studies: cohort and case-control studies. Plast Reconstr Surg 2010;126:2234–42. doi:10.1097/PRS.0b013e3181f44abc

5. Lin EHB, Rutter CM, Katon W, et al. Depression and Advanced Complications of Diabetes: A prospective cohort study. Diabetes Care 2009;33:264–9. doi:10.2337/dc09-1068

6. Mann C. Observational research methods. Research design II: cohort, cross sectional, and case-control studies. Emerg Med J 2003;20:54–60. http://emj.bmj.com/content/20/1/54.abstract

7. Fogel D. Factors associated with clinical trials that fail and opportunities for improving the likelihood of success: A review. Contemp Clin Trials Commun 2018;11:156–64. doi:10.1016/j.conctc.2018.08.001

8. Morrow B. An overview of cohort study designs and their advantages and disadvantages. International Journal of Therapy and Rehabilitation 2010;17:518–23. doi:10.12968/ijtr.2010.17.10.78810

9. Lu C. Observational studies: a review of study designs, challenges and strategies to reduce confounding. Int J Clin Pract 2009;63:691–7. doi:10.1111/j.1742-1241.2009.02056.x

10. Levin KA. Study design III: Cross-sectional studies. Evid Based Dent 2006;7:24–5. doi:10.1038/sj.ebd.6400375

11. Jesson J. Cross-sectional studies in prescribing research. J Clin Pharm Ther 2001;26:397–403. doi:10.1046/j.1365-2710.2001.00373.x

12. Bhide A, Shah PS, Acharya G. A simplified guide to randomized controlled trials. Acta Obstet Gynecol Scand 2018;97:380–7. doi:10.1111/aogs.13309

13. Hariton E, Locascio JJ. Randomised controlled trials – the gold standard for effectiveness research. BJOG: Int J Obstet Gy 2018;125:1716. doi:10.1111/1471-0528.15199

14. Mulimani PS. Evidence-based practice and the evidence pyramid: A 21st century orthodontic odyssey. American Journal of Orthodontics and Dentofacial Orthopedics 2017;152:1–8. doi:10.1016/j.ajodo.2017.03.020

15. Deaton A, Cartwright N. Understanding and misunderstanding randomized controlled trials. Social Science & Medicine 2018;210:2–21. doi:10.1016/j.socscimed.2017.12.005

16. Chandler J, Hopewell S. Cochrane methods – twenty years experience in developing systematic review methods. Syst Rev 2013;2. doi:10.1186/2046-4053-2-76

17. Murad MH, Sultan S, Haffar S, et al. Methodological quality and synthesis of case series and case reports. BMJ EBM 2018;23:60–3. doi:10.1136/bmjebm-2017-110853

18. Munn Z, Barker TH, Moola S, et al. Methodological quality of case series studies: an introduction to the JBI critical appraisal tool. JBI Evidence Synthesis 2019;18:2127–33. doi:10.11124/jbisrir-d-19-00099

19. Daly J, Willis K, Small R, et al. A hierarchy of evidence for assessing qualitative health research. Journal of Clinical Epidemiology 2007;60:43–9. doi:10.1016/j.jclinepi.2006.03.014

20. Gluud C, Gluud LL. Evidence based diagnostics. BMJ 2005;330:724–6. doi:10.1136/bmj.330.7493.724

21. Rochon PA, Gurwitz JH, Sykora K, et al. Reader’s guide to critical appraisal of cohort studies: 1. Role and design. BMJ 2005;330:895–7. doi:10.1136/bmj.330.7496.895

22. Mamdani M, Sykora K, Li P, et al. Reader’s guide to critical appraisal of cohort studies: 2. Assessing potential for confounding. BMJ 2005;330:960–2. doi:10.1136/bmj.330.7497.960

23. Young JM, Solomon MJ. How to critically appraise an article. Nat Rev Gastroenterol Hepatol 2009;6:82–91. doi:10.1038/ncpgasthep1331

24. Sutton-Tyrrell K. Assessing bias in case-control studies. Proper selection of cases and controls. Stroke 1991;22:938–42. doi:10.1161/01.str.22.7.938

25. Sedgwick P. Bias in observational study designs: cross sectional studies. BMJ 2015;350:h1286. doi:10.1136/bmj.h1286

26. Pannucci CJ, Wilkins EG. Identifying and Avoiding Bias in Research. Plastic and Reconstructive Surgery 2010;126:619–25. doi:10.1097/prs.0b013e3181de24bc

27. Siedlecki SL. Understanding Descriptive Research Designs and Methods. Clin Nurse Spec 2020;34:8–12. doi:10.1097/nur.0000000000000493

28. Mulrow CD. Systematic Reviews: Rationale for systematic reviews. BMJ 1994;309:597–9. doi:10.1136/bmj.309.6954.597

29. Littlewood C. The RCT means nothing to me! Manual Therapy 2011;16:614–7. doi:10.1016/j.math.2011.06.006

30. Van Spall HGC, Toren A, Kiss A, et al. Eligibility Criteria of Randomized Controlled Trials Published in High-Impact General Medical Journals. JAMA 2007;297:1233. doi:10.1001/jama.297.11.1233

31. Pinchbeck GL, Archer DC. How to critically appraise a paper. Equine Vet Educ 2018;32:104–9. doi:10.1111/eve.12896

32. Greenhalgh T. How to read a paper: Statistics for the non-statistician. I: Different types of data need different statistical tests. BMJ 1997;315:364–6. doi:10.1136/bmj.315.7104.364

33. Greenhalgh T. How to read a paper: Statistics for the non-statistician. II: ‘Significant’ relations and their pitfalls. BMJ 1997;315:422–5. doi:10.1136/bmj.315.7105.422

34. Skelly A, Dettori J, Brodt E. Assessing bias: the importance of considering confounding. Evidence-Based Spine-Care Journal 2012;3:9–12. doi:10.1055/s-0031-1298595

35. Emberson JR, Bennett DA. Effect of alcohol on risk of coronary heart disease and stroke: causality, bias, or a bit of both? Vascular Health and Risk Management 2006;2:239–49. doi:10.2147/vhrm.2006.2.3.239

36. Corrao G, Rubbiati L, Bagnardi V, et al. Alcohol and coronary heart disease: a meta-analysis. Addiction 2000;95:1505–23. doi:10.1046/j.1360-0443.2000.951015056.x

37. Lewis SJ, Orland BI. The Importance and Impact of Evidence Based medicine. JMCP 2004;10:S3–5. doi:10.18553/jmcp.2004.10.s5-a.s3
